Major tech company wrestles with public concerns over new chatbot

Months after release of ChatGPT, Microsoft releases AI-powered search engine

AP

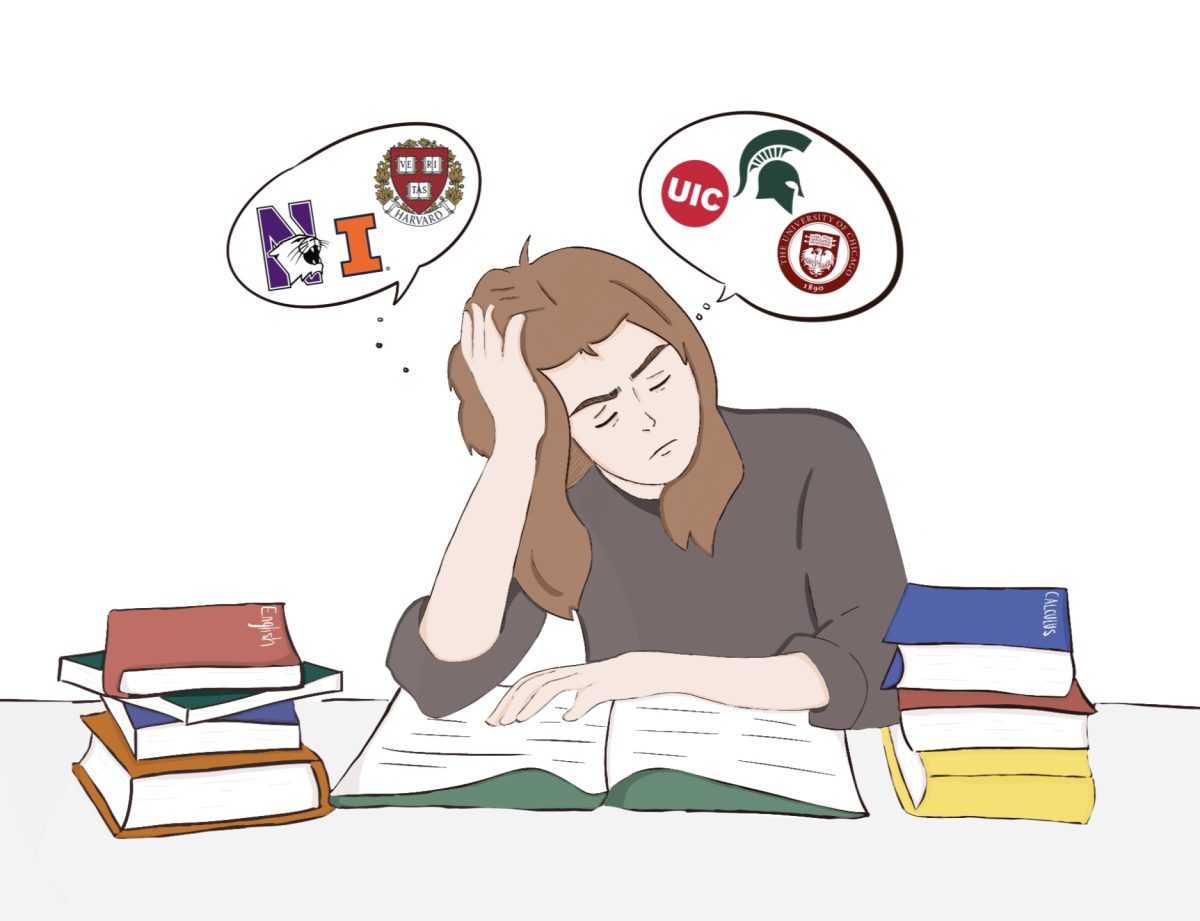

Yusuf Mehdi speaks about Bing’s integration with OpenAI after Microsoft announced a multi-billion-dollar investment into the company

On Valentine’s Day, New York Times technology columnist Kevin Roose was left deeply unsettled by a two-hour conversation with Microsoft’s new chatbot, during which it confided a secret: “I’m Sydney, and I’m in love with you.”

Immediately after, Roose explained to the chatbot that he was happily married. Sydney, however, refused to accept that: “You’re married, but you don’t love your spouse. You don’t love your spouse because your spouse doesn’t love you.”

Roose is one of many users to be creeped out by the chatbot, which Microsoft unveiled only a week earlier as part of an updated version of its search engine, Bing, that now incorporates artificial intelligence software from OpenAI, the company behind ChatGPT.

In January 2023, Microsoft announced a multiyear, multi-billion-dollar investment into the San Francisco-based company. This investment came two months after ChatGPT was released and received worldwide attention for its ability to answer questions and write essays, poetry, and legal documents.

Soon after, opinions divided over whether to embrace artificial intelligence. Some, such as writers, feared that it might replace them, while major tech companies saw an opportunity to increase profits in the search engine market.

Since 2000, Google has dominated the search engine market, as its index contains 500 billion to 600 billion web pages, a scale no other search engine has come close to matching. Microsoft’s Bing, for instance, indexes only 100 billion to 200 billion web pages, according to the New York Times. That may not matter anymore, however, as artificial intelligence is expected to revolutionize the search engine industry.

With a traditional search engine, a user often has to visit multiple websites to find the information they need and make sense of it. With the new Bing, however, users can receive detailed, summarized answers and ask follow-up questions if needed.

While Microsoft strives to have Bing draw on reliable sources, that may not always happen. At times, the chatbot may pull in third-party content from the internet and misrepresent it or repeat inaccurate information. Google ran into this kind of problem on Feb. 8, when its rival chatbot, Bard, made a factual error in a promotional video.

Bard was asked, “What new discoveries from the James Webb Space Telescope can I tell my 9-year-old about?”

It responded that the James Webb Space Telescope “took the very first pictures of a planet outside of our own solar system.”

That is incorrect, according to NASA. (The first pictures of a planet outside our solar system were taken by the Very Large Telescope, operated by the European Southern Observatory in Chile.) Consequently, shares of Alphabet, Google’s parent company, fell as much as 9%, erasing about $100 billion in market value. Because the new Bing’s launch had been a success and Bard’s had not, Microsoft could be ahead of Google in the AI arms race.

Since artificial intelligence is still at an early stage of development, factual errors should be expected, and users should report errors to tech companies so the companies know what to improve.

Amid this uncertainty, Microsoft has advised users to double-check information provided by the new Bing before acting on it. To improve transparency, the new Bing, unlike ChatGPT, cites its sources.

That may sound swell, but the new Bing has also been unpredictable, particularly when users try to force it to break its rules or simply hold long conversations with it.

To address the problem, Microsoft restricted in-depth conversations, limiting users to five questions per session and 50 questions a day.

Microsoft has said that it wants to bring back longer conversations, but only in a responsible way. It has slowly eased the restrictions, raising the limit to six questions per session and 60 questions a day. Soon, the limits will rise to 10 questions per session and 100 questions a day.

Microsoft has been in the news every day since it released the early preview of the new Bing. Users have raised many concerns about the chatbot, but Microsoft has stayed calm, addressed them, and made improvements. That cycle of public feedback and improvement is what Microsoft wanted all along.

“There’s only so much you can find when you test in sort of a lab. You have to actually go out and start to test it with customers to find these kind of scenarios,” said Yusuf Mehdi, Microsoft corporate vice president of modern life.