Facial recognition: a white man’s world

Facial recognition displays racism in its misidentification of Black women

AP

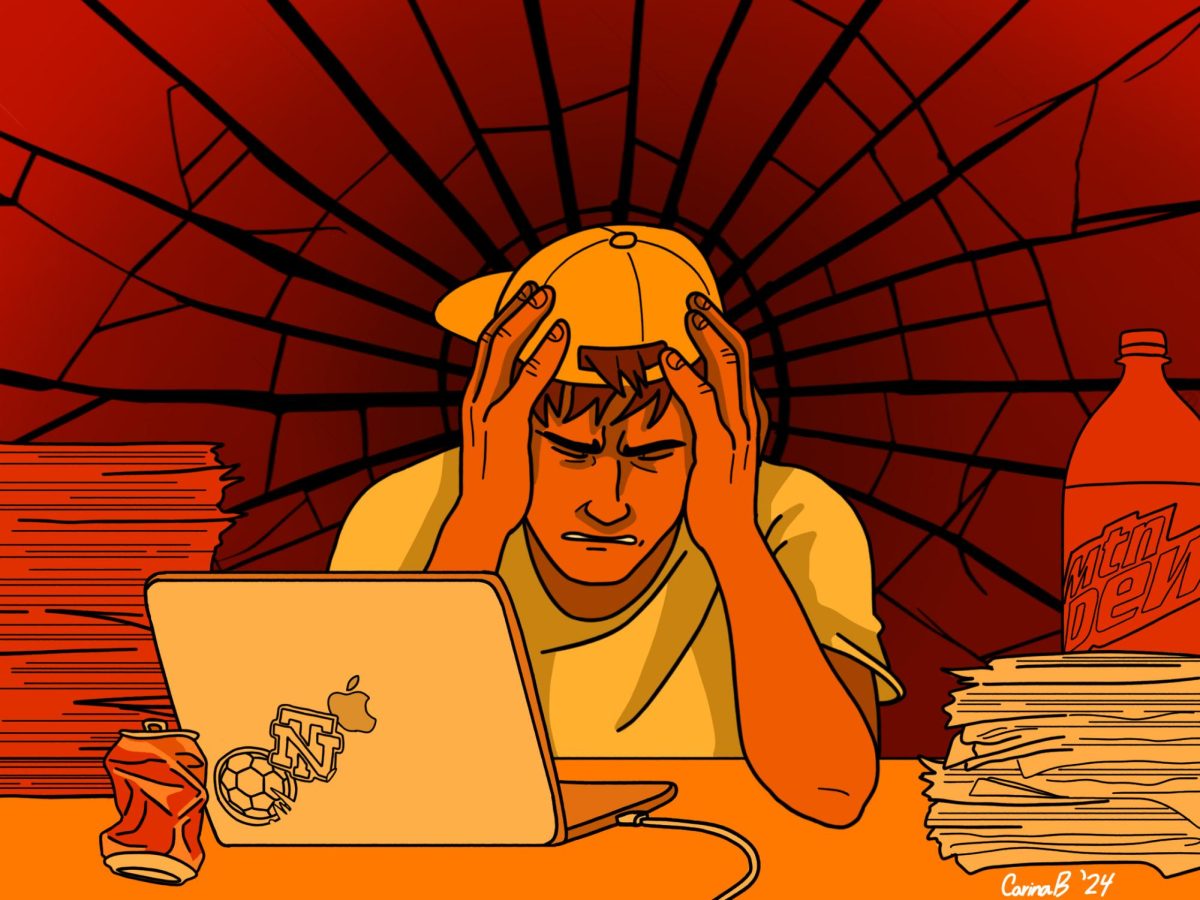

MIT facial recognition researcher Joy Buolamwini poses for a portrait. Facial recognition misidentifies Black people because of bias in its input data.

In 2020, IBM, Microsoft, and Amazon announced that they would no longer research, develop, or sell their facial recognition technology to law enforcement in the U.S. By using computer-generated filters to transform faces into numerical expressions, facial recognition gave computers a humanlike ability to identify people. The problem? Well, it turns out that computers have a penchant for racism, too.

This racism stems from the way facial recognition software is designed. Intended for police work, facial recognition was trained on mug shots to identify suspects. Because of discriminatory law enforcement practices, Black Americans are more likely than White Americans to be incarcerated for minor crimes. As a result, Black faces are overrepresented in mug-shot databases, and the systems trained on those databases learned to associate Black people with criminality, which led to the disproportionate arrests of innocent people.

The NYPD, for instance, cataloged 42,000 “gang affiliates” using facial recognition technologies trained on these mug-shot databases, but there was no evidence to suggest that many of those cataloged were actually affiliated with gangs. In fact, of the Black people cataloged by the NYPD, 99% were shown to have no gang affiliation at all.

Courtesy of this mug-shot training, facial recognition systems fail not only ordinary people but also members of Congress. The American Civil Liberties Union (ACLU) tested Amazon’s facial recognition software, Rekognition, on photos of members of Congress. Rekognition incorrectly matched 28 of them with mug-shot images, and people of color were disproportionately represented among the false matches.

And Black Americans weren’t just mistaken for mug shots. In June 2015, 22-year-old Brooklyn software engineer Jacky Alciné received a link from a friend that took him to the then-new Google Photos service, which was filled with pictures the two of them had taken. Google Photos had an embedded recognition system that could analyze images and sort them into folders (such as “dog” or “birthday”) based on what the service recognized in them.

Alciné noticed a folder labeled “gorilla,” which didn’t make sense as none of the pictures they took had gorillas in them. When he clicked into the folder, he found more than 80 photos of his friend, who is Black.

Again, the issue traced back to how the system was developed. Google Photos used a mathematical system called a “neural network,” which learns to recognize something (in this case, a gorilla) by analyzing many images of it. It is the engineers’ responsibility, however, to choose the right data for these systems to analyze. Neural networks must be carefully designed from the start. If there are cracks in the system early on, they can be nearly impossible to undo.

If there haven’t been even more instances of Black people being miscategorized, whether as mug shots or as gorillas, it is because facial recognition systems often don’t detect a Black face in the first place.

Computer scientist Joy Buolamwini was working on a project at MIT that required a facial recognition system to detect her face. No matter how many times she tried, the system couldn’t detect her. That is, until, out of frustration, she put on a white mask that was sitting on her desk.

“Black Skin, White Masks,” Buolamwini remarked in an interview, referencing Frantz Fanon’s 1952 critique of racism. In Fanon’s account, the native cultural origins of the “Black Skin” were seen as inferior. As Black people found jobs and rose to higher social tiers by adopting whiter names and mastering the language of their colonizers, they were said to wear “White Masks.” Society defined itself as white then and, thanks to facial recognition, continues to do so now.

Specifically, this construct caters to White men and fails Black women the most. In the 2018 “Gender Shades” project, five facial recognition algorithms (Microsoft, Face++, IBM, Amazon, and Kairos) were tested for accuracy. Test subjects were divided into four categories: darker-skinned females, darker-skinned males, lighter-skinned females, and lighter-skinned males. All five algorithms were least accurate on darker-skinned females, with an average error rate of 31%. The error rates for darker-skinned females were 34% higher than those for lighter-skinned males.

The lack of insight into the blatant racism displayed by facial recognition software can be attributed to two causes, the first being the homogeneous crowd of AI pioneers. There is very little Black presence in the groups building novel software, and with that limited presence comes limited, if any, insight into these glaring problems. Systems such as facial recognition were trained through the perspective of White Americans, or really, White male Americans.

This leads to the second issue: it can be uncomfortable to address racial biases in the software. It’s easier to brush over these issues and defend the overall ingenuity instead. People are reluctant even to consider fixing the problems.

This reluctance to acknowledge and address racial bias has always existed, from the world Fanon critiqued in 1952 to the over-incarceration of Black men, and it has managed to slither its way into the world of AI facial recognition. Just as with neural networks, however, it is imperative to mend the cracks early on, before they harden into something that can’t be undone.